Detailed Description

A Linear Factor Graph is a factor graph where all factors are Gaussian, i.e.

Factor == GaussianFactor VectorValues = A values structure of vectors Most of the time, linear factor graphs arise by linearizing a non-linear factor graph.

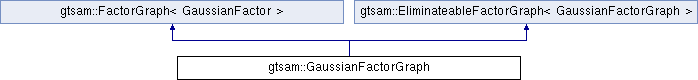

Inheritance diagram for gtsam::GaussianFactorGraph:

Inheritance diagram for gtsam::GaussianFactorGraph:Public Member Functions | |

| GaussianFactorGraph () | |

| Default constructor. | |

| template<typename ITERATOR > | |

| GaussianFactorGraph (ITERATOR firstFactor, ITERATOR lastFactor) | |

| Construct from iterator over factors. | |

| template<class CONTAINER > | |

| GaussianFactorGraph (const CONTAINER &factors) | |

| Construct from container of factors (shared_ptr or plain objects) | |

| template<class DERIVEDFACTOR > | |

| GaussianFactorGraph (const FactorGraph< DERIVEDFACTOR > &graph) | |

| Implicit copy/downcast constructor to override explicit template container constructor. | |

| virtual | ~GaussianFactorGraph () |

| Virtual destructor. | |

| void | add (const GaussianFactor &factor) |

| Add a factor by value - makes a copy. | |

| void | add (const sharedFactor &factor) |

| Add a factor by pointer - stores pointer without copying the factor. | |

| void | add (const Vector &b) |

| Add a null factor. | |

| void | add (Key key1, const Matrix &A1, const Vector &b, const SharedDiagonal &model=SharedDiagonal()) |

| Add a unary factor. | |

| void | add (Key key1, const Matrix &A1, Key key2, const Matrix &A2, const Vector &b, const SharedDiagonal &model=SharedDiagonal()) |

| Add a binary factor. | |

| void | add (Key key1, const Matrix &A1, Key key2, const Matrix &A2, Key key3, const Matrix &A3, const Vector &b, const SharedDiagonal &model=SharedDiagonal()) |

| Add a ternary factor. | |

| template<class TERMS > | |

| void | add (const TERMS &terms, const Vector &b, const SharedDiagonal &model=SharedDiagonal()) |

| Add an n-ary factor. | |

| Keys | keys () const |

| std::map< Key, size_t > | getKeyDimMap () const |

| double | error (const VectorValues &x) const |

| unnormalized error | |

| double | probPrime (const VectorValues &c) const |

| Unnormalized probability. More... | |

| virtual GaussianFactorGraph | clone () const |

| Clone() performs a deep-copy of the graph, including all of the factors. More... | |

| virtual GaussianFactorGraph::shared_ptr | cloneToPtr () const |

| CloneToPtr() performs a simple assignment to a new graph and returns it. More... | |

| GaussianFactorGraph | negate () const |

| Returns the negation of all factors in this graph - corresponds to antifactors. More... | |

Testable | |

| bool | equals (const This &fg, double tol=1e-9) const |

Linear Algebra | |

| std::vector< std::tuple< int, int, double > > | sparseJacobian (const Ordering &ordering, size_t &nrows, size_t &ncols) const |

| Returns a sparse augmented Jacbian matrix as a vector of i, j, and s, where i(k) and j(k) are the base 0 row and column indices, and s(k) is the entry as a double. More... | |

| std::vector< std::tuple< int, int, double > > | sparseJacobian () const |

| Returns a sparse augmented Jacobian matrix with default ordering. | |

| Matrix | sparseJacobian_ () const |

| Matrix version of sparseJacobian: generates a 3*m matrix with [i,j,s] entries such that S(i(k),j(k)) = s(k), which can be given to MATLAB's sparse. More... | |

| Matrix | augmentedJacobian (const Ordering &ordering) const |

| Return a dense \( [ \;A\;b\; ] \in \mathbb{R}^{m \times n+1} \) Jacobian matrix, augmented with b with the noise models baked into A and b. More... | |

| Matrix | augmentedJacobian () const |

| Return a dense \( [ \;A\;b\; ] \in \mathbb{R}^{m \times n+1} \) Jacobian matrix, augmented with b with the noise models baked into A and b. More... | |

| std::pair< Matrix, Vector > | jacobian (const Ordering &ordering) const |

| Return the dense Jacobian \( A \) and right-hand-side \( b \), with the noise models baked into A and b. More... | |

| std::pair< Matrix, Vector > | jacobian () const |

| Return the dense Jacobian \( A \) and right-hand-side \( b \), with the noise models baked into A and b. More... | |

| Matrix | augmentedHessian (const Ordering &ordering) const |

| Return a dense \( \Lambda \in \mathbb{R}^{n+1 \times n+1} \) Hessian matrix, augmented with the information vector \( \eta \). More... | |

| Matrix | augmentedHessian () const |

| Return a dense \( \Lambda \in \mathbb{R}^{n+1 \times n+1} \) Hessian matrix, augmented with the information vector \( \eta \). More... | |

| std::pair< Matrix, Vector > | hessian (const Ordering &ordering) const |

| Return the dense Hessian \( \Lambda \) and information vector \( \eta \), with the noise models baked in. More... | |

| std::pair< Matrix, Vector > | hessian () const |

| Return the dense Hessian \( \Lambda \) and information vector \( \eta \), with the noise models baked in. More... | |

| virtual VectorValues | hessianDiagonal () const |

| Return only the diagonal of the Hessian A'*A, as a VectorValues. | |

| virtual std::map< Key, Matrix > | hessianBlockDiagonal () const |

| Return the block diagonal of the Hessian for this factor. | |

| VectorValues | optimize (const Eliminate &function=EliminationTraitsType::DefaultEliminate) const |

Solve the factor graph by performing multifrontal variable elimination in COLAMD order using the dense elimination function specified in function (default EliminatePreferCholesky), followed by back-substitution in the Bayes tree resulting from elimination. More... | |

| VectorValues | optimize (const Ordering &, const Eliminate &function=EliminationTraitsType::DefaultEliminate) const |

Solve the factor graph by performing multifrontal variable elimination in COLAMD order using the dense elimination function specified in function (default EliminatePreferCholesky), followed by back-substitution in the Bayes tree resulting from elimination. More... | |

| VectorValues | optimizeDensely () const |

| Optimize using Eigen's dense Cholesky factorization. | |

| VectorValues | gradient (const VectorValues &x0) const |

| Compute the gradient of the energy function, \( \nabla_{x=x_0} \left\Vert \Sigma^{-1} A x - b \right\Vert^2 \), centered around \( x = x_0 \). More... | |

| virtual VectorValues | gradientAtZero () const |

| Compute the gradient of the energy function, \( \nabla_{x=0} \left\Vert \Sigma^{-1} A x - b \right\Vert^2 \), centered around zero. More... | |

| VectorValues | optimizeGradientSearch () const |

| Optimize along the gradient direction, with a closed-form computation to perform the line search. More... | |

| VectorValues | transposeMultiply (const Errors &e) const |

| x = A'*e More... | |

| void | transposeMultiplyAdd (double alpha, const Errors &e, VectorValues &x) const |

| x += alpha*A'*e | |

| Errors | gaussianErrors (const VectorValues &x) const |

| return A*x-b | |

| Errors | operator* (const VectorValues &x) const |

| ** return A*x */ | |

| void | multiplyHessianAdd (double alpha, const VectorValues &x, VectorValues &y) const |

| ** y += alpha*A'A*x */ | |

| void | multiplyInPlace (const VectorValues &x, Errors &e) const |

| ** In-place version e <- A*x that overwrites e. */ | |

| void | multiplyInPlace (const VectorValues &x, const Errors::iterator &e) const |

| In-place version e <- A*x that takes an iterator. | |

| void | printErrors (const VectorValues &x, const std::string &str="GaussianFactorGraph: ", const KeyFormatter &keyFormatter=DefaultKeyFormatter, const std::function< bool(const Factor *, double, size_t)> &printCondition=[](const Factor *, double, size_t) { return true;}) const |

Public Member Functions inherited from gtsam::FactorGraph< GaussianFactor > Public Member Functions inherited from gtsam::FactorGraph< GaussianFactor > | |

| virtual | ~FactorGraph ()=default |

| Default destructor. | |

| void | reserve (size_t size) |

| Reserve space for the specified number of factors if you know in advance how many there will be (works like FastVector::reserve). | |

| IsDerived< DERIVEDFACTOR > | push_back (boost::shared_ptr< DERIVEDFACTOR > factor) |

| Add a factor directly using a shared_ptr. | |

| IsDerived< DERIVEDFACTOR > | push_back (const DERIVEDFACTOR &factor) |

| Add a factor by value, will be copy-constructed (use push_back with a shared_ptr to avoid the copy). | |

| IsDerived< DERIVEDFACTOR > | emplace_shared (Args &&... args) |

| Emplace a shared pointer to factor of given type. | |

| IsDerived< DERIVEDFACTOR > | add (boost::shared_ptr< DERIVEDFACTOR > factor) |

add is a synonym for push_back. | |

| std::enable_if< std::is_base_of< FactorType, DERIVEDFACTOR >::value, boost::assign::list_inserter< RefCallPushBack< This > > >::type | operator+= (boost::shared_ptr< DERIVEDFACTOR > factor) |

+= works well with boost::assign list inserter. | |

| HasDerivedElementType< ITERATOR > | push_back (ITERATOR firstFactor, ITERATOR lastFactor) |

| Push back many factors with an iterator over shared_ptr (factors are not copied) | |

| HasDerivedValueType< ITERATOR > | push_back (ITERATOR firstFactor, ITERATOR lastFactor) |

| Push back many factors with an iterator (factors are copied) | |

| HasDerivedElementType< CONTAINER > | push_back (const CONTAINER &container) |

| Push back many factors as shared_ptr's in a container (factors are not copied) | |

| HasDerivedValueType< CONTAINER > | push_back (const CONTAINER &container) |

| Push back non-pointer objects in a container (factors are copied). | |

| void | add (const FACTOR_OR_CONTAINER &factorOrContainer) |

| Add a factor or container of factors, including STL collections, BayesTrees, etc. | |

| boost::assign::list_inserter< CRefCallPushBack< This > > | operator+= (const FACTOR_OR_CONTAINER &factorOrContainer) |

| Add a factor or container of factors, including STL collections, BayesTrees, etc. | |

| std::enable_if< std::is_base_of< This, typenameCLIQUE::FactorGraphType >::value >::type | push_back (const BayesTree< CLIQUE > &bayesTree) |

| Push back a BayesTree as a collection of factors. More... | |

| FactorIndices | add_factors (const CONTAINER &factors, bool useEmptySlots=false) |

| Add new factors to a factor graph and returns a list of new factor indices, optionally finding and reusing empty factor slots. | |

| virtual void | print (const std::string &s="FactorGraph", const KeyFormatter &formatter=DefaultKeyFormatter) const |

| print out graph | |

| bool | equals (const This &fg, double tol=1e-9) const |

| Check equality. | |

| size_t | size () const |

| return the number of factors (including any null factors set by remove() ). | |

| bool | empty () const |

| Check if the graph is empty (null factors set by remove() will cause this to return false). | |

| const sharedFactor | at (size_t i) const |

| Get a specific factor by index (this checks array bounds and may throw an exception, as opposed to operator[] which does not). | |

| sharedFactor & | at (size_t i) |

| Get a specific factor by index (this checks array bounds and may throw an exception, as opposed to operator[] which does not). | |

| const sharedFactor | operator[] (size_t i) const |

| Get a specific factor by index (this does not check array bounds, as opposed to at() which does). | |

| sharedFactor & | operator[] (size_t i) |

| Get a specific factor by index (this does not check array bounds, as opposed to at() which does). | |

| const_iterator | begin () const |

| Iterator to beginning of factors. | |

| const_iterator | end () const |

| Iterator to end of factors. | |

| sharedFactor | front () const |

| Get the first factor. | |

| sharedFactor | back () const |

| Get the last factor. | |

| iterator | begin () |

| non-const STL-style begin() | |

| iterator | end () |

| non-const STL-style end() | |

| void | resize (size_t size) |

| Directly resize the number of factors in the graph. More... | |

| void | remove (size_t i) |

| delete factor without re-arranging indexes by inserting a nullptr pointer | |

| void | replace (size_t index, sharedFactor factor) |

| replace a factor by index | |

| iterator | erase (iterator item) |

| Erase factor and rearrange other factors to take up the empty space. | |

| iterator | erase (iterator first, iterator last) |

| Erase factors and rearrange other factors to take up the empty space. | |

| size_t | nrFactors () const |

| return the number of non-null factors | |

| KeySet | keys () const |

| Potentially slow function to return all keys involved, sorted, as a set. | |

| KeyVector | keyVector () const |

| Potentially slow function to return all keys involved, sorted, as a vector. | |

| bool | exists (size_t idx) const |

| MATLAB interface utility: Checks whether a factor index idx exists in the graph and is a live pointer. | |

Public Member Functions inherited from gtsam::EliminateableFactorGraph< GaussianFactorGraph > Public Member Functions inherited from gtsam::EliminateableFactorGraph< GaussianFactorGraph > | |

| boost::shared_ptr< BayesNetType > | eliminateSequential (OptionalOrderingType orderingType=boost::none, const Eliminate &function=EliminationTraitsType::DefaultEliminate, OptionalVariableIndex variableIndex=boost::none) const |

| Do sequential elimination of all variables to produce a Bayes net. More... | |

| boost::shared_ptr< BayesNetType > | eliminateSequential (const Ordering &ordering, const Eliminate &function=EliminationTraitsType::DefaultEliminate, OptionalVariableIndex variableIndex=boost::none) const |

| Do sequential elimination of all variables to produce a Bayes net. More... | |

| boost::shared_ptr< BayesTreeType > | eliminateMultifrontal (OptionalOrderingType orderingType=boost::none, const Eliminate &function=EliminationTraitsType::DefaultEliminate, OptionalVariableIndex variableIndex=boost::none) const |

| Do multifrontal elimination of all variables to produce a Bayes tree. More... | |

| boost::shared_ptr< BayesTreeType > | eliminateMultifrontal (const Ordering &ordering, const Eliminate &function=EliminationTraitsType::DefaultEliminate, OptionalVariableIndex variableIndex=boost::none) const |

| Do multifrontal elimination of all variables to produce a Bayes tree. More... | |

| std::pair< boost::shared_ptr< BayesNetType >, boost::shared_ptr< FactorGraphType > > | eliminatePartialSequential (const Ordering &ordering, const Eliminate &function=EliminationTraitsType::DefaultEliminate, OptionalVariableIndex variableIndex=boost::none) const |

Do sequential elimination of some variables, in ordering provided, to produce a Bayes net and a remaining factor graph. More... | |

| std::pair< boost::shared_ptr< BayesNetType >, boost::shared_ptr< FactorGraphType > > | eliminatePartialSequential (const KeyVector &variables, const Eliminate &function=EliminationTraitsType::DefaultEliminate, OptionalVariableIndex variableIndex=boost::none) const |

Do sequential elimination of the given variables in an ordering computed by COLAMD to produce a Bayes net and a remaining factor graph. More... | |

| std::pair< boost::shared_ptr< BayesTreeType >, boost::shared_ptr< FactorGraphType > > | eliminatePartialMultifrontal (const Ordering &ordering, const Eliminate &function=EliminationTraitsType::DefaultEliminate, OptionalVariableIndex variableIndex=boost::none) const |

Do multifrontal elimination of some variables, in ordering provided, to produce a Bayes tree and a remaining factor graph. More... | |

| std::pair< boost::shared_ptr< BayesTreeType >, boost::shared_ptr< FactorGraphType > > | eliminatePartialMultifrontal (const KeyVector &variables, const Eliminate &function=EliminationTraitsType::DefaultEliminate, OptionalVariableIndex variableIndex=boost::none) const |

Do multifrontal elimination of the given variables in an ordering computed by COLAMD to produce a Bayes net and a remaining factor graph. More... | |

| boost::shared_ptr< BayesNetType > | marginalMultifrontalBayesNet (boost::variant< const Ordering &, const KeyVector & > variables, const Eliminate &function=EliminationTraitsType::DefaultEliminate, OptionalVariableIndex variableIndex=boost::none) const |

| Compute the marginal of the requested variables and return the result as a Bayes net. More... | |

| boost::shared_ptr< BayesNetType > | marginalMultifrontalBayesNet (boost::variant< const Ordering &, const KeyVector & > variables, const Ordering &marginalizedVariableOrdering, const Eliminate &function=EliminationTraitsType::DefaultEliminate, OptionalVariableIndex variableIndex=boost::none) const |

| Compute the marginal of the requested variables and return the result as a Bayes net. More... | |

| boost::shared_ptr< BayesTreeType > | marginalMultifrontalBayesTree (boost::variant< const Ordering &, const KeyVector & > variables, const Eliminate &function=EliminationTraitsType::DefaultEliminate, OptionalVariableIndex variableIndex=boost::none) const |

| Compute the marginal of the requested variables and return the result as a Bayes tree. More... | |

| boost::shared_ptr< BayesTreeType > | marginalMultifrontalBayesTree (boost::variant< const Ordering &, const KeyVector & > variables, const Ordering &marginalizedVariableOrdering, const Eliminate &function=EliminationTraitsType::DefaultEliminate, OptionalVariableIndex variableIndex=boost::none) const |

| Compute the marginal of the requested variables and return the result as a Bayes tree. More... | |

| boost::shared_ptr< FactorGraphType > | marginal (const KeyVector &variables, const Eliminate &function=EliminationTraitsType::DefaultEliminate, OptionalVariableIndex variableIndex=boost::none) const |

| Compute the marginal factor graph of the requested variables. | |

Public Types | |

| typedef GaussianFactorGraph | This |

| Typedef to this class. | |

| typedef FactorGraph< GaussianFactor > | Base |

| Typedef to base factor graph type. | |

| typedef EliminateableFactorGraph< This > | BaseEliminateable |

| Typedef to base elimination class. | |

| typedef boost::shared_ptr< This > | shared_ptr |

| shared_ptr to this class | |

| typedef KeySet | Keys |

| Return the set of variables involved in the factors (computes a set union). | |

Public Types inherited from gtsam::FactorGraph< GaussianFactor > Public Types inherited from gtsam::FactorGraph< GaussianFactor > | |

| typedef GaussianFactor | FactorType |

| factor type | |

| typedef boost::shared_ptr< GaussianFactor > | sharedFactor |

| Shared pointer to a factor. | |

| typedef sharedFactor | value_type |

| typedef FastVector< sharedFactor >::iterator | iterator |

| typedef FastVector< sharedFactor >::const_iterator | const_iterator |

Public Types inherited from gtsam::EliminateableFactorGraph< GaussianFactorGraph > Public Types inherited from gtsam::EliminateableFactorGraph< GaussianFactorGraph > | |

| typedef EliminationTraits< FactorGraphType > | EliminationTraitsType |

| Typedef to the specific EliminationTraits for this graph. | |

| typedef EliminationTraitsType::ConditionalType | ConditionalType |

| Conditional type stored in the Bayes net produced by elimination. | |

| typedef EliminationTraitsType::BayesNetType | BayesNetType |

| Bayes net type produced by sequential elimination. | |

| typedef EliminationTraitsType::EliminationTreeType | EliminationTreeType |

| Elimination tree type that can do sequential elimination of this graph. | |

| typedef EliminationTraitsType::BayesTreeType | BayesTreeType |

| Bayes tree type produced by multifrontal elimination. | |

| typedef EliminationTraitsType::JunctionTreeType | JunctionTreeType |

| Junction tree type that can do multifrontal elimination of this graph. | |

| typedef std::pair< boost::shared_ptr< ConditionalType >, boost::shared_ptr< _FactorType > > | EliminationResult |

| The pair of conditional and remaining factor produced by a single dense elimination step on a subgraph. | |

| typedef std::function< EliminationResult(const FactorGraphType &, const Ordering &)> | Eliminate |

| The function type that does a single dense elimination step on a subgraph. | |

| typedef boost::optional< const VariableIndex & > | OptionalVariableIndex |

| Typedef for an optional variable index as an argument to elimination functions. | |

| typedef boost::optional< Ordering::OrderingType > | OptionalOrderingType |

| Typedef for an optional ordering type. | |

Friends | |

| class | boost::serialization::access |

| Serialization function. | |

Additional Inherited Members | |

Protected Member Functions inherited from gtsam::FactorGraph< GaussianFactor > Protected Member Functions inherited from gtsam::FactorGraph< GaussianFactor > | |

| FactorGraph () | |

| Default constructor. | |

| FactorGraph (ITERATOR firstFactor, ITERATOR lastFactor) | |

| Constructor from iterator over factors (shared_ptr or plain objects) | |

| FactorGraph (const CONTAINER &factors) | |

| Construct from container of factors (shared_ptr or plain objects) | |

Protected Attributes inherited from gtsam::FactorGraph< GaussianFactor > Protected Attributes inherited from gtsam::FactorGraph< GaussianFactor > | |

| FastVector< sharedFactor > | factors_ |

| concept check, makes sure FACTOR defines print and equals More... | |

Member Function Documentation

◆ augmentedHessian() [1/2]

| Matrix gtsam::GaussianFactorGraph::augmentedHessian | ( | ) | const |

Return a dense \( \Lambda \in \mathbb{R}^{n+1 \times n+1} \) Hessian matrix, augmented with the information vector \( \eta \).

The augmented Hessian is

\[ \left[ \begin{array}{ccc} \Lambda & \eta \\ \eta^T & c \end{array} \right] \]

and the negative log-likelihood is \( \frac{1}{2} x^T \Lambda x + \eta^T x + c \).

◆ augmentedHessian() [2/2]

| Matrix gtsam::GaussianFactorGraph::augmentedHessian | ( | const Ordering & | ordering | ) | const |

Return a dense \( \Lambda \in \mathbb{R}^{n+1 \times n+1} \) Hessian matrix, augmented with the information vector \( \eta \).

The augmented Hessian is

\[ \left[ \begin{array}{ccc} \Lambda & \eta \\ \eta^T & c \end{array} \right] \]

and the negative log-likelihood is \( \frac{1}{2} x^T \Lambda x + \eta^T x + c \).

◆ augmentedJacobian() [1/2]

| Matrix gtsam::GaussianFactorGraph::augmentedJacobian | ( | ) | const |

Return a dense \( [ \;A\;b\; ] \in \mathbb{R}^{m \times n+1} \) Jacobian matrix, augmented with b with the noise models baked into A and b.

The negative log-likelihood is \( \frac{1}{2} \Vert Ax-b \Vert^2 \). See also GaussianFactorGraph::jacobian and GaussianFactorGraph::sparseJacobian.

◆ augmentedJacobian() [2/2]

| Matrix gtsam::GaussianFactorGraph::augmentedJacobian | ( | const Ordering & | ordering | ) | const |

Return a dense \( [ \;A\;b\; ] \in \mathbb{R}^{m \times n+1} \) Jacobian matrix, augmented with b with the noise models baked into A and b.

The negative log-likelihood is \( \frac{1}{2} \Vert Ax-b \Vert^2 \). See also GaussianFactorGraph::jacobian and GaussianFactorGraph::sparseJacobian.

◆ clone()

|

virtual |

Clone() performs a deep-copy of the graph, including all of the factors.

Cloning preserves null factors so indices for the original graph are still valid for the cloned graph.

◆ cloneToPtr()

|

virtual |

CloneToPtr() performs a simple assignment to a new graph and returns it.

There is no preservation of null factors!

◆ gradient()

| VectorValues gtsam::GaussianFactorGraph::gradient | ( | const VectorValues & | x0 | ) | const |

Compute the gradient of the energy function, \( \nabla_{x=x_0} \left\Vert \Sigma^{-1} A x - b \right\Vert^2 \), centered around \( x = x_0 \).

The gradient is \( A^T(Ax-b) \).

- Parameters

-

fg The Jacobian factor graph $(A,b)$ x0 The center about which to compute the gradient

- Returns

- The gradient as a VectorValues

◆ gradientAtZero()

|

virtual |

Compute the gradient of the energy function, \( \nabla_{x=0} \left\Vert \Sigma^{-1} A x - b \right\Vert^2 \), centered around zero.

The gradient is \( A^T(Ax-b) \).

- Parameters

-

fg The Jacobian factor graph $(A,b)$ [output] g A VectorValues to store the gradient, which must be preallocated, see allocateVectorValues

- Returns

- The gradient as a VectorValues

◆ hessian() [1/2]

| pair< Matrix, Vector > gtsam::GaussianFactorGraph::hessian | ( | ) | const |

Return the dense Hessian \( \Lambda \) and information vector \( \eta \), with the noise models baked in.

The negative log-likelihood is \frac{1}{2} x^T \Lambda x + \eta^T x + c. See also GaussianFactorGraph::augmentedHessian.

◆ hessian() [2/2]

| pair< Matrix, Vector > gtsam::GaussianFactorGraph::hessian | ( | const Ordering & | ordering | ) | const |

Return the dense Hessian \( \Lambda \) and information vector \( \eta \), with the noise models baked in.

The negative log-likelihood is \frac{1}{2} x^T \Lambda x + \eta^T x + c. See also GaussianFactorGraph::augmentedHessian.

◆ jacobian() [1/2]

| pair< Matrix, Vector > gtsam::GaussianFactorGraph::jacobian | ( | ) | const |

Return the dense Jacobian \( A \) and right-hand-side \( b \), with the noise models baked into A and b.

The negative log-likelihood is \( \frac{1}{2} \Vert Ax-b \Vert^2 \). See also GaussianFactorGraph::augmentedJacobian and GaussianFactorGraph::sparseJacobian.

◆ jacobian() [2/2]

| pair< Matrix, Vector > gtsam::GaussianFactorGraph::jacobian | ( | const Ordering & | ordering | ) | const |

Return the dense Jacobian \( A \) and right-hand-side \( b \), with the noise models baked into A and b.

The negative log-likelihood is \( \frac{1}{2} \Vert Ax-b \Vert^2 \). See also GaussianFactorGraph::augmentedJacobian and GaussianFactorGraph::sparseJacobian.

◆ negate()

| GaussianFactorGraph gtsam::GaussianFactorGraph::negate | ( | ) | const |

Returns the negation of all factors in this graph - corresponds to antifactors.

Will convert all factors to HessianFactors due to negation of information. Cloning preserves null factors so indices for the original graph are still valid for the cloned graph.

◆ optimize() [1/2]

| VectorValues gtsam::GaussianFactorGraph::optimize | ( | const Eliminate & | function = EliminationTraitsType::DefaultEliminate | ) | const |

Solve the factor graph by performing multifrontal variable elimination in COLAMD order using the dense elimination function specified in function (default EliminatePreferCholesky), followed by back-substitution in the Bayes tree resulting from elimination.

Is equivalent to calling graph.eliminateMultifrontal()->optimize().

◆ optimize() [2/2]

| VectorValues gtsam::GaussianFactorGraph::optimize | ( | const Ordering & | ordering, |

| const Eliminate & | function = EliminationTraitsType::DefaultEliminate |

||

| ) | const |

Solve the factor graph by performing multifrontal variable elimination in COLAMD order using the dense elimination function specified in function (default EliminatePreferCholesky), followed by back-substitution in the Bayes tree resulting from elimination.

Is equivalent to calling graph.eliminateMultifrontal()->optimize().

◆ optimizeGradientSearch()

| VectorValues gtsam::GaussianFactorGraph::optimizeGradientSearch | ( | ) | const |

Optimize along the gradient direction, with a closed-form computation to perform the line search.

The gradient is computed about \( \delta x=0 \).

This function returns \( \delta x \) that minimizes a reparametrized problem. The error function of a GaussianBayesNet is

\[ f(\delta x) = \frac{1}{2} |R \delta x - d|^2 = \frac{1}{2}d^T d - d^T R \delta x + \frac{1}{2} \delta x^T R^T R \delta x \]

with gradient and Hessian

\[ g(\delta x) = R^T(R\delta x - d), \qquad G(\delta x) = R^T R. \]

This function performs the line search in the direction of the gradient evaluated at \( g = g(\delta x = 0) \) with step size \( \alpha \) that minimizes \( f(\delta x = \alpha g) \):

\[ f(\alpha) = \frac{1}{2} d^T d + g^T \delta x + \frac{1}{2} \alpha^2 g^T G g \]

Optimizing by setting the derivative to zero yields \( \hat \alpha = (-g^T g) / (g^T G g) \). For efficiency, this function evaluates the denominator without computing the Hessian \( G \), returning

\[ \delta x = \hat\alpha g = \frac{-g^T g}{(R g)^T(R g)} \]

◆ probPrime()

|

inline |

Unnormalized probability.

O(n)

◆ sparseJacobian()

| SparseTriplets gtsam::GaussianFactorGraph::sparseJacobian | ( | const Ordering & | ordering, |

| size_t & | nrows, | ||

| size_t & | ncols | ||

| ) | const |

Returns a sparse augmented Jacbian matrix as a vector of i, j, and s, where i(k) and j(k) are the base 0 row and column indices, and s(k) is the entry as a double.

The standard deviations are baked into A and b

- Returns

- the sparse matrix as a std::vector of std::tuples

- Parameters

-

ordering the column ordering [out] nrows The number of rows in the augmented Jacobian [out] ncols The number of columns in the augmented Jacobian

◆ sparseJacobian_()

| Matrix gtsam::GaussianFactorGraph::sparseJacobian_ | ( | ) | const |

Matrix version of sparseJacobian: generates a 3*m matrix with [i,j,s] entries such that S(i(k),j(k)) = s(k), which can be given to MATLAB's sparse.

Note: i, j are 1-indexed. The standard deviations are baked into A and b

◆ transposeMultiply()

| VectorValues gtsam::GaussianFactorGraph::transposeMultiply | ( | const Errors & | e | ) | const |

x = A'*e

- ************************************************************************* */* ************************************************************************* */

The documentation for this class was generated from the following files:

- /Users/dellaert/git/github/gtsam/linear/GaussianFactorGraph.h

- /Users/dellaert/git/github/gtsam/linear/GaussianFactorGraph.cpp