Detailed Description

A Bayes net made from linear-Gaussian densities.

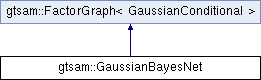

Inheritance diagram for gtsam::GaussianBayesNet:

Public Member Functions | |

Standard Constructors | |

| GaussianBayesNet () | |

| Construct an empty Bayes net. | |

| template<typename ITERATOR > | |

| GaussianBayesNet (ITERATOR firstConditional, ITERATOR lastConditional) | |

| Construct from iterator over conditionals. | |

| template<class CONTAINER > | |

| GaussianBayesNet (const CONTAINER &conditionals) | |

| Construct from container of factors (shared_ptr or plain objects) | |

| template<class DERIVEDCONDITIONAL > | |

| GaussianBayesNet (const FactorGraph< DERIVEDCONDITIONAL > &graph) | |

| Implicit copy/downcast constructor to override explicit template container constructor. | |

| virtual | ~GaussianBayesNet () |

| Destructor. | |

Testable | |

| bool | equals (const This &bn, double tol=1e-9) const |

| Check equality. | |

Standard Interface | |

| VectorValues | optimize () const |

| Solve the GaussianBayesNet, i.e. return \( x = R^{-1}*d \), by back-substitution. | |

| VectorValues | optimize (const VectorValues &solutionForMissing) const |

| Version of optimize for incomplete BayesNet, needs solution for missing variables. More... | |

| Ordering | ordering () const |

| Return ordering corresponding to a topological sort. More... | |

Linear Algebra | |

| std::pair< Matrix, Vector > | matrix (const Ordering &ordering) const |

| Return (dense) upper-triangular matrix representation. Will return upper-triangular matrix only when using 'ordering' above. More... | |

| std::pair< Matrix, Vector > | matrix () const |

| Return (dense) upper-triangular matrix representation. Will return upper-triangular matrix only when using 'ordering' above. More... | |

| VectorValues | optimizeGradientSearch () const |

| Optimize along the gradient direction, with a closed-form computation to perform the line search. More... | |

| VectorValues | gradient (const VectorValues &x0) const |

| Compute the gradient of the energy function, \( \nabla_{x=x_0} \left\Vert \Sigma^{-1} R x - d \right\Vert^2 \), centered around \( x = x_0 \). More... | |

| VectorValues | gradientAtZero () const |

| Compute the gradient of the energy function, \( \nabla_{x=0} \left\Vert \Sigma^{-1} R x - d \right\Vert^2 \), centered around zero. More... | |

| double | error (const VectorValues &x) const |

| 0.5 * sum of squared Mahalanobis distances. | |

| double | determinant () const |

| Computes the determinant of a GaussianBayesNet. More... | |

| double | logDeterminant () const |

| Computes the log of the determinant of a GaussianBayesNet. More... | |

| VectorValues | backSubstitute (const VectorValues &gx) const |

| Backsubstitute with a different RHS vector than the one stored in this BayesNet. More... | |

| VectorValues | backSubstituteTranspose (const VectorValues &gx) const |

| Transpose backsubstitute with a different RHS vector than the one stored in this BayesNet. More... | |

| void | print (const std::string &s="", const KeyFormatter &formatter=DefaultKeyFormatter) const override |

| print graph More... | |

| void | saveGraph (const std::string &s, const KeyFormatter &keyFormatter=DefaultKeyFormatter) const |

| Save the GaussianBayesNet as an image. More... | |

Public Member Functions inherited from gtsam::FactorGraph< GaussianConditional > | |

| virtual | ~FactorGraph ()=default |

| Default destructor. | |

| void | reserve (size_t size) |

| Reserve space for the specified number of factors if you know in advance how many there will be (works like FastVector::reserve). | |

| IsDerived< DERIVEDFACTOR > | push_back (boost::shared_ptr< DERIVEDFACTOR > factor) |

| Add a factor directly using a shared_ptr. | |

| IsDerived< DERIVEDFACTOR > | push_back (const DERIVEDFACTOR &factor) |

| Add a factor by value, will be copy-constructed (use push_back with a shared_ptr to avoid the copy). | |

| IsDerived< DERIVEDFACTOR > | emplace_shared (Args &&... args) |

| Emplace a shared pointer to factor of given type. | |

| IsDerived< DERIVEDFACTOR > | add (boost::shared_ptr< DERIVEDFACTOR > factor) |

| add is a synonym for push_back. | |

| std::enable_if< std::is_base_of< FactorType, DERIVEDFACTOR >::value, boost::assign::list_inserter< RefCallPushBack< This > > >::type | operator+= (boost::shared_ptr< DERIVEDFACTOR > factor) |

| += works well with boost::assign list inserter. | |

| HasDerivedElementType< ITERATOR > | push_back (ITERATOR firstFactor, ITERATOR lastFactor) |

| Push back many factors with an iterator over shared_ptr (factors are not copied) | |

| HasDerivedValueType< ITERATOR > | push_back (ITERATOR firstFactor, ITERATOR lastFactor) |

| Push back many factors with an iterator (factors are copied) | |

| HasDerivedElementType< CONTAINER > | push_back (const CONTAINER &container) |

| Push back many factors as shared_ptr's in a container (factors are not copied) | |

| HasDerivedValueType< CONTAINER > | push_back (const CONTAINER &container) |

| Push back non-pointer objects in a container (factors are copied). | |

| void | add (const FACTOR_OR_CONTAINER &factorOrContainer) |

| Add a factor or container of factors, including STL collections, BayesTrees, etc. | |

| boost::assign::list_inserter< CRefCallPushBack< This > > | operator+= (const FACTOR_OR_CONTAINER &factorOrContainer) |

| Add a factor or container of factors, including STL collections, BayesTrees, etc. | |

| std::enable_if< std::is_base_of< This, typename CLIQUE::FactorGraphType >::value >::type | push_back (const BayesTree< CLIQUE > &bayesTree) |

| Push back a BayesTree as a collection of factors. More... | |

| FactorIndices | add_factors (const CONTAINER &factors, bool useEmptySlots=false) |

| Add new factors to a factor graph and returns a list of new factor indices, optionally finding and reusing empty factor slots. | |

| bool | equals (const This &fg, double tol=1e-9) const |

| Check equality. | |

| size_t | size () const |

| return the number of factors (including any null factors set by remove() ). | |

| bool | empty () const |

| Check if the graph is empty (null factors set by remove() will cause this to return false). | |

| const sharedFactor | at (size_t i) const |

| Get a specific factor by index (this checks array bounds and may throw an exception, as opposed to operator[] which does not). | |

| sharedFactor & | at (size_t i) |

| Get a specific factor by index (this checks array bounds and may throw an exception, as opposed to operator[] which does not). | |

| const sharedFactor | operator[] (size_t i) const |

| Get a specific factor by index (this does not check array bounds, as opposed to at() which does). | |

| sharedFactor & | operator[] (size_t i) |

| Get a specific factor by index (this does not check array bounds, as opposed to at() which does). | |

| const_iterator | begin () const |

| Iterator to beginning of factors. | |

| const_iterator | end () const |

| Iterator to end of factors. | |

| sharedFactor | front () const |

| Get the first factor. | |

| sharedFactor | back () const |

| Get the last factor. | |

| iterator | begin () |

| non-const STL-style begin() | |

| iterator | end () |

| non-const STL-style end() | |

| void | resize (size_t size) |

| Directly resize the number of factors in the graph. More... | |

| void | remove (size_t i) |

| Delete a factor without rearranging indexes by replacing it with a nullptr. | |

| void | replace (size_t index, sharedFactor factor) |

| replace a factor by index | |

| iterator | erase (iterator item) |

| Erase factor and rearrange other factors to take up the empty space. | |

| iterator | erase (iterator first, iterator last) |

| Erase factors and rearrange other factors to take up the empty space. | |

| size_t | nrFactors () const |

| return the number of non-null factors | |

| KeySet | keys () const |

| Potentially slow function to return all keys involved, sorted, as a set. | |

| KeyVector | keyVector () const |

| Potentially slow function to return all keys involved, sorted, as a vector. | |

| bool | exists (size_t idx) const |

| MATLAB interface utility: Checks whether a factor index idx exists in the graph and is a live pointer. | |

Public Types | |

| typedef FactorGraph< GaussianConditional > | Base |

| typedef GaussianBayesNet | This |

| typedef GaussianConditional | ConditionalType |

| typedef boost::shared_ptr< This > | shared_ptr |

| typedef boost::shared_ptr< ConditionalType > | sharedConditional |

Public Types inherited from gtsam::FactorGraph< GaussianConditional > | |

| typedef GaussianConditional | FactorType |

| factor type | |

| typedef boost::shared_ptr< GaussianConditional > | sharedFactor |

| Shared pointer to a factor. | |

| typedef sharedFactor | value_type |

| typedef FastVector< sharedFactor >::iterator | iterator |

| typedef FastVector< sharedFactor >::const_iterator | const_iterator |

Friends | |

| class | boost::serialization::access |

| Serialization function. | |

Additional Inherited Members | |

Protected Member Functions inherited from gtsam::FactorGraph< GaussianConditional > | |

| FactorGraph () | |

| Default constructor. | |

| FactorGraph (ITERATOR firstFactor, ITERATOR lastFactor) | |

| Constructor from iterator over factors (shared_ptr or plain objects) | |

| FactorGraph (const CONTAINER &factors) | |

| Construct from container of factors (shared_ptr or plain objects) | |

Protected Attributes inherited from gtsam::FactorGraph< GaussianConditional > | |

| FastVector< sharedFactor > | factors_ |

| concept check, makes sure FACTOR defines print and equals More... | |

Member Function Documentation

◆ backSubstitute()

| VectorValues gtsam::GaussianBayesNet::backSubstitute | ( | const VectorValues & | gx | ) | const |

Backsubstitute with a different RHS vector than the one stored in this BayesNet.

gy=inv(R*inv(Sigma))*gx
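The formula above can be illustrated with a small standalone sketch. It does not use GTSAM types: `Matrix`, `Vector`, and `backSubstituteSketch` are hypothetical names introduced here, R is assumed to be a dense upper-triangular matrix with a nonzero diagonal, and Sigma is read as a diagonal matrix of per-row sigmas (an assumption, since this page does not define it).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // dense, row-major (illustrative)
using Vector = std::vector<double>;

// Solve (R * inv(Sigma)) * gy = gx for gy.
// Assumptions: R is square upper triangular with nonzero diagonal,
// Sigma = diag(sigmas). Hypothetical helper, not part of the GTSAM API.
Vector backSubstituteSketch(const Matrix& R, const Vector& sigmas,
                            const Vector& gx) {
  const std::size_t n = R.size();
  assert(sigmas.size() == n && gx.size() == n);

  // Step 1: solve R * z = gx, starting from the last row and moving up.
  Vector z(n, 0.0);
  for (std::size_t k = n; k-- > 0;) {
    double sum = gx[k];
    for (std::size_t j = k + 1; j < n; ++j) sum -= R[k][j] * z[j];
    z[k] = sum / R[k][k];
  }

  // Step 2: z = inv(Sigma) * gy, hence gy = Sigma * z (element-wise scaling).
  Vector gy(n);
  for (std::size_t k = 0; k < n; ++k) gy[k] = sigmas[k] * z[k];
  return gy;
}
```

With R = [[2, 1], [0, 4]], sigmas = (0.5, 2) and gx = (4, 8), the intermediate solve gives z = (1, 2), so the sketch returns gy = (0.5, 4).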

◆ backSubstituteTranspose()

| VectorValues gtsam::GaussianBayesNet::backSubstituteTranspose | ( | const VectorValues & | gx | ) | const |

Transpose backsubstitute with a different RHS vector than the one stored in this BayesNet.

Computes gy=inv(L)*gx by solving L*gy=gx, where gy=inv(R'*inv(Sigma))*gx. This is done in two steps: solve gz'*R'=gx', then scale gy = gz.*sigmas.
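As a rough illustration of the transposed solve, the sketch below takes L = R' * inv(Sigma) literally and solves L * gy = gx by forward substitution on the lower-triangular R'. It is a standalone sketch, not GTSAM code: `backSubstituteTransposeSketch` and the dense `Matrix`/`Vector` aliases are hypothetical, and Sigma is again assumed to be diagonal.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // dense, row-major (illustrative)
using Vector = std::vector<double>;

// Solve (R' * inv(Sigma)) * gy = gx for gy, with R upper triangular.
// Assumptions: nonzero diagonal, Sigma = diag(sigmas). Hypothetical helper.
Vector backSubstituteTransposeSketch(const Matrix& R, const Vector& sigmas,
                                     const Vector& gx) {
  const std::size_t n = R.size();
  assert(sigmas.size() == n && gx.size() == n);

  // R' is lower triangular, with (R')[k][j] = R[j][k] for j <= k,
  // so R' * gz = gx is solved from the first row downwards.
  Vector gz(n, 0.0);
  for (std::size_t k = 0; k < n; ++k) {
    double sum = gx[k];
    for (std::size_t j = 0; j < k; ++j) sum -= R[j][k] * gz[j];
    gz[k] = sum / R[k][k];
  }

  // Undo the inv(Sigma) factor: gy = gz .* sigmas.
  Vector gy(n);
  for (std::size_t k = 0; k < n; ++k) gy[k] = gz[k] * sigmas[k];
  return gy;
}
```

For R = [[2, 1], [0, 4]], unit sigmas and gx = (4, 8), this yields gy = (2, 1.5), and multiplying R' by that vector recovers gx.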

◆ determinant()

| double gtsam::GaussianBayesNet::determinant | ( | ) | const |

Computes the determinant of a GaussianBayesNet.

A GaussianBayesNet is an upper triangular matrix, and the determinant of an upper triangular matrix is the product of its diagonal elements. Instead of multiplying the diagonal elements directly, we add their logarithms and exponentiate the sum at the end, because this is more numerically stable.

- Parameters

-

bayesNet The input GaussianBayesNet

- Returns

- The determinant
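The log-sum approach described above can be sketched in a few lines. This is an illustrative standalone version, not the GTSAM implementation: `logDeterminantSketch` and `determinantSketch` are hypothetical names, the input is just the list of diagonal entries of R, and those entries are assumed to be strictly positive.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Sum of log(r_ii) over the diagonal of an upper-triangular matrix R.
// Assumption of this sketch: every diagonal entry is strictly positive.
double logDeterminantSketch(const std::vector<double>& diagonal) {
  double sum = 0.0;
  for (double r : diagonal) {
    assert(r > 0.0);
    sum += std::log(r);
  }
  return sum;
}

// Determinant recovered by exponentiating the accumulated log-sum once.
double determinantSketch(const std::vector<double>& diagonal) {
  return std::exp(logDeterminantSketch(diagonal));
}
```

The benefit shows up on large systems: for 400 diagonal entries of 1e-3, a running product underflows to zero in double precision, whereas the log-sum stays a finite value of about -2763.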


◆ gradient()

| VectorValues gtsam::GaussianBayesNet::gradient | ( | const VectorValues & | x0 | ) | const |

Compute the gradient of the energy function, \( \nabla_{x=x_0} \left\Vert \Sigma^{-1} R x - d \right\Vert^2 \), centered around \( x = x_0 \).

The gradient is \( R^T(Rx-d) \).

- Parameters

-

x0 The center about which to compute the gradient

- Returns

- The gradient as a VectorValues
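To make the expression \( R^T(Rx-d) \) concrete, here is a small dense sketch. It is not GTSAM code: `gradientSketch` and the `Matrix`/`Vector` aliases are hypothetical, R is assumed square, and the noise scaling by sigmas is left out for brevity.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // dense, row-major (illustrative)
using Vector = std::vector<double>;

// Gradient R^T (R x0 - d) of 0.5 * |R x - d|^2 evaluated at x0.
// Hypothetical helper for illustration; assumes a square R.
Vector gradientSketch(const Matrix& R, const Vector& d, const Vector& x0) {
  const std::size_t n = R.size();
  assert(d.size() == n && x0.size() == n);

  // Residual r = R * x0 - d.
  Vector r(n, 0.0);
  for (std::size_t i = 0; i < n; ++i) {
    double sum = -d[i];
    for (std::size_t j = 0; j < n; ++j) sum += R[i][j] * x0[j];
    r[i] = sum;
  }

  // Gradient g = R^T * r, accumulated one row of R at a time.
  Vector g(n, 0.0);
  for (std::size_t i = 0; i < n; ++i)
    for (std::size_t j = 0; j < n; ++j) g[j] += R[i][j] * r[i];
  return g;
}
```

For R = [[2, 1], [0, 4]] and d = (4, 8), evaluating at x0 = 0 gives (-8, -36), which equals \( -R^T d \), and evaluating at the solution x0 = (1, 2) gives the zero vector.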

◆ gradientAtZero()

| VectorValues gtsam::GaussianBayesNet::gradientAtZero | ( | ) | const |

Compute the gradient of the energy function, \( \nabla_{x=0} \left\Vert \Sigma^{-1} R x - d \right\Vert^2 \), centered around zero.

The gradient about zero is \( -R^T d \). See also gradient(const GaussianBayesNet&, const VectorValues&).

- Parameters

-

[output] g A VectorValues to store the gradient, which must be preallocated, see allocateVectorValues

◆ logDeterminant()

| double gtsam::GaussianBayesNet::logDeterminant | ( | ) | const |

Computes the log of the determinant of a GaussianBayesNet.

A GaussianBayesNet is an upper triangular matrix, and the determinant of an upper triangular matrix is the product of its diagonal elements.

- Parameters

-

bayesNet The input GaussianBayesNet

- Returns

- The log of the determinant

◆ matrix() [1/2]

| pair< Matrix, Vector > gtsam::GaussianBayesNet::matrix | ( | ) | const |

Return (dense) upper-triangular matrix representation. Will return upper-triangular matrix only when using 'ordering' above.

In case Bayes net is incomplete zero columns are added to the end.

◆ matrix() [2/2]

| pair< Matrix, Vector > gtsam::GaussianBayesNet::matrix | ( | const Ordering & | ordering | ) | const |

Return (dense) upper-triangular matrix representation. Will return upper-triangular matrix only when using 'ordering' above.

In case Bayes net is incomplete zero columns are added to the end.

◆ optimize()

| VectorValues gtsam::GaussianBayesNet::optimize | ( | const VectorValues & | solutionForMissing | ) | const |

Version of optimize for incomplete BayesNet, needs solution for missing variables.

Solves each node in turn, in topological sort order (parents first).
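The node-by-node solve can be sketched with scalar conditionals. Everything here is hypothetical and only meant to show the idea: `ScalarConditional` encodes r * x_key + sum_j s_j * x_parent_j = d, `optimizeSketch` is not a GTSAM function, and the list is assumed to be stored so that each node's parents appear later in the list or in `solutionForMissing`, so iterating in reverse visits parents first.

```cpp
#include <cassert>
#include <map>
#include <utility>
#include <vector>

// Hypothetical scalar linear-Gaussian conditional:
//   r * x[key] + sum_j s_j * x[parent_j] = d
struct ScalarConditional {
  int key;
  double r;
  double d;
  std::vector<std::pair<int, double>> parents;  // (parent key, coefficient s_j)
};

// Solve an incomplete net, given values for variables that have no conditional.
// Assumption: parents of a node come later in `net` or are in solutionForMissing.
std::map<int, double> optimizeSketch(
    const std::vector<ScalarConditional>& net,
    const std::map<int, double>& solutionForMissing) {
  std::map<int, double> x = solutionForMissing;
  // Reverse iteration visits parents before their children.
  for (auto it = net.rbegin(); it != net.rend(); ++it) {
    assert(it->r != 0.0);
    double rhs = it->d;
    for (const auto& parent : it->parents) {
      // at() throws std::out_of_range if a parent value is not yet known.
      rhs -= parent.second * x.at(parent.first);
    }
    x[it->key] = rhs / it->r;
  }
  return x;
}
```

For example, with conditionals 2*x0 + x1 = 4 and 4*x1 + 2*x2 = 8, and x2 = 1 supplied as the solution for the missing variable, the sketch first obtains x1 = 1.5 and then x0 = 1.25.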

◆ optimizeGradientSearch()

| VectorValues gtsam::GaussianBayesNet::optimizeGradientSearch | ( | ) | const |

Optimize along the gradient direction, with a closed-form computation to perform the line search.

The gradient is computed about \( \delta x=0 \).

This function returns \( \delta x \) that minimizes a reparametrized problem. The error function of a GaussianBayesNet is

\[ f(\delta x) = \frac{1}{2} |R \delta x - d|^2 = \frac{1}{2}d^T d - d^T R \delta x + \frac{1}{2} \delta x^T R^T R \delta x \]

with gradient and Hessian

\[ g(\delta x) = R^T(R\delta x - d), \qquad G(\delta x) = R^T R. \]

This function performs the line search in the direction of the gradient evaluated at \( g = g(\delta x = 0) \) with step size \( \alpha \) that minimizes \( f(\delta x = \alpha g) \):

\[ f(\alpha) = \frac{1}{2} d^T d + \alpha g^T g + \frac{1}{2} \alpha^2 g^T G g \]

Optimizing by setting the derivative to zero yields \( \hat \alpha = (-g^T g) / (g^T G g) \). For efficiency, this function evaluates the denominator without computing the Hessian \( G \), returning

\[ \delta x = \hat\alpha g = \frac{-g^T g}{(R g)^T(R g)} \, g \]
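The closed-form line search can be sketched on dense data as follows. This is an illustrative standalone version under stated assumptions, not the GTSAM implementation: `gradientSearchSketch`, `dotSketch`, and the `Matrix`/`Vector` aliases are hypothetical, and R is assumed square. It computes \( g = -R^T d \), evaluates the denominator as \( (Rg)^T(Rg) \) without forming \( G = R^T R \), and returns \( \hat\alpha g \).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // dense, row-major (illustrative)
using Vector = std::vector<double>;

// Dot product of two equally sized vectors.
double dotSketch(const Vector& a, const Vector& b) {
  assert(a.size() == b.size());
  double sum = 0.0;
  for (std::size_t k = 0; k < a.size(); ++k) sum += a[k] * b[k];
  return sum;
}

// One exact line-search step along the gradient at delta x = 0.
// Hypothetical helper; assumes a square R.
Vector gradientSearchSketch(const Matrix& R, const Vector& d) {
  const std::size_t n = R.size();
  assert(d.size() == n);

  // g = g(0) = -R^T d.
  Vector g(n, 0.0);
  for (std::size_t i = 0; i < n; ++i)
    for (std::size_t j = 0; j < n; ++j) g[j] -= R[i][j] * d[i];

  // R g, so that g^T G g = (R g)^T (R g) without building G.
  Vector Rg(n, 0.0);
  for (std::size_t i = 0; i < n; ++i)
    for (std::size_t j = 0; j < n; ++j) Rg[i] += R[i][j] * g[j];

  const double denom = dotSketch(Rg, Rg);
  if (denom == 0.0) return Vector(n, 0.0);  // zero gradient: no step to take

  const double alpha = -dotSketch(g, g) / denom;
  Vector dx(n);
  for (std::size_t k = 0; k < n; ++k) dx[k] = alpha * g[k];
  return dx;
}
```

When R is the identity, the step lands exactly on the minimizer: d = (3, 4) gives g = (-3, -4), a step size of -1, and a returned step of (3, 4). For a general R the result is only the best point along the gradient direction, not the full solution.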

◆ ordering()

| Ordering gtsam::GaussianBayesNet::ordering | ( | ) | const |

Return ordering corresponding to a topological sort.

There are many topological sorts of a Bayes net. This one corresponds to the one that makes 'matrix' below upper-triangular. If the Bayes net is incomplete, any non-frontal variables are added to the end.


◆ print()

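| void gtsam::GaussianBayesNet::print | ( | const std::string & | s = "", |

| const KeyFormatter & | formatter = DefaultKeyFormatter |

||

| ) | const |

inline override virtual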

print graph

Reimplemented from gtsam::FactorGraph< GaussianConditional >.

◆ saveGraph()

| void gtsam::GaussianBayesNet::saveGraph | ( | const std::string & | s, |

| const KeyFormatter & | keyFormatter = DefaultKeyFormatter |

||

| ) | const |

Save the GaussianBayesNet as an image.

Requires dot to be installed.

- Parameters

-

s The name of the figure.

keyFormatter Formatter to use for styling keys in the graph.

The documentation for this class was generated from the following files:

- /Users/dellaert/git/github/gtsam/linear/GaussianBayesNet.h

- /Users/dellaert/git/github/gtsam/linear/GaussianBayesNet.cpp